Supervised_Machine_Learning

(Regression_and_Classification)

Module - 1

Optional Lab - W1: Brief Introduction to Python and Jupyter Notebooks

Welcome to the first optional lab! Optional labs are available to: - provide information - like this notebook - reinforce lecture material with hands-on examples - provide working examples of routines used in the graded labs

Goals

In this lab, you will: - Get a brief introduction to Jupyter notebooks - Take a tour of Jupyter notebooks - Learn the difference between markdown cells and code cells - Practice some basic python

The easiest way to become familiar with Jupyter notebooks is to take the tour available above in the Help menu:

Jupyter notebooks have two types of cells that are used in this course. Cells such as this which contain documentation called Markdown Cells. The name is derived from the simple formatting language used in the cells. You will not be required to produce markdown cells. Its useful to understand the cell pulldown shown in graphic below. Occasionally, a cell will end up in the wrong mode and you may need to restore it to the right state:

The other type of cell is the code cell where you will write your code:

[ ]:

#This is a 'Code' Cell

print("This is code cell")

Python

You can write your code in the code cells. To run the code, select the cell and either - hold the shift-key down and hit ‘enter’ or ‘return’ - click the ‘run’ arrow above

Print statement

[ ]:

# print statements

variable = "right in the strings!"

print(f"f strings allow you to embed variables {variable}")

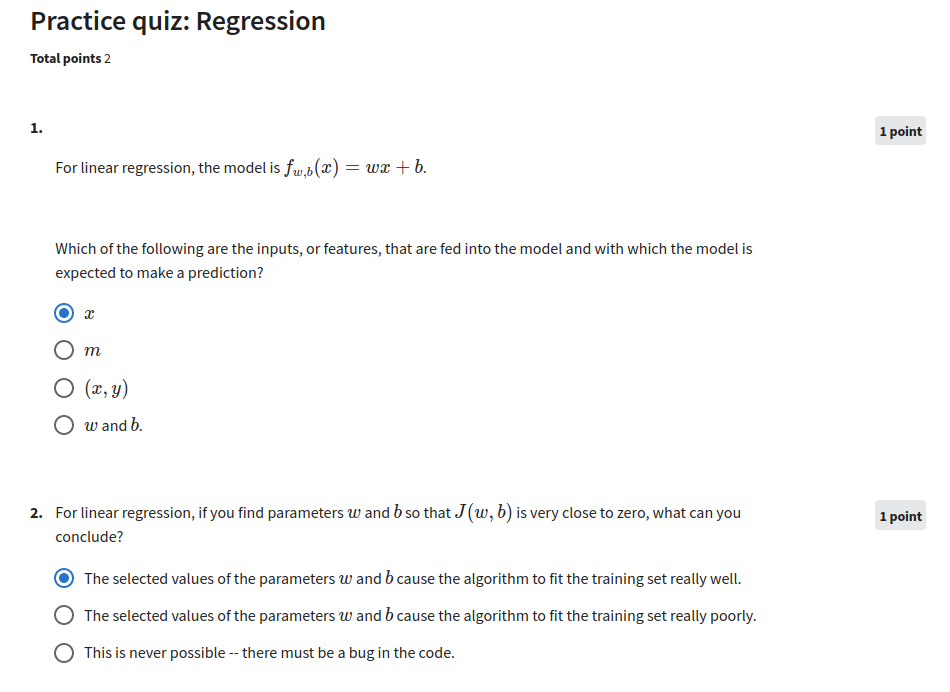

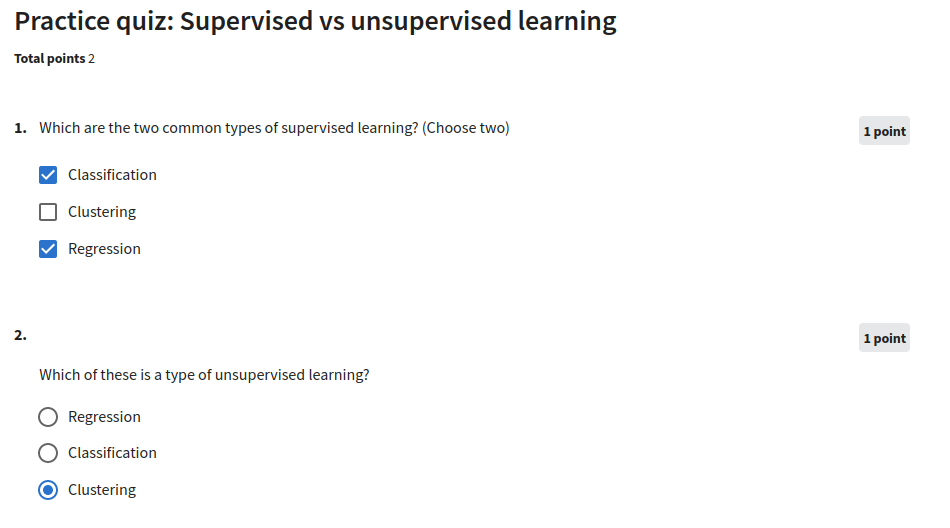

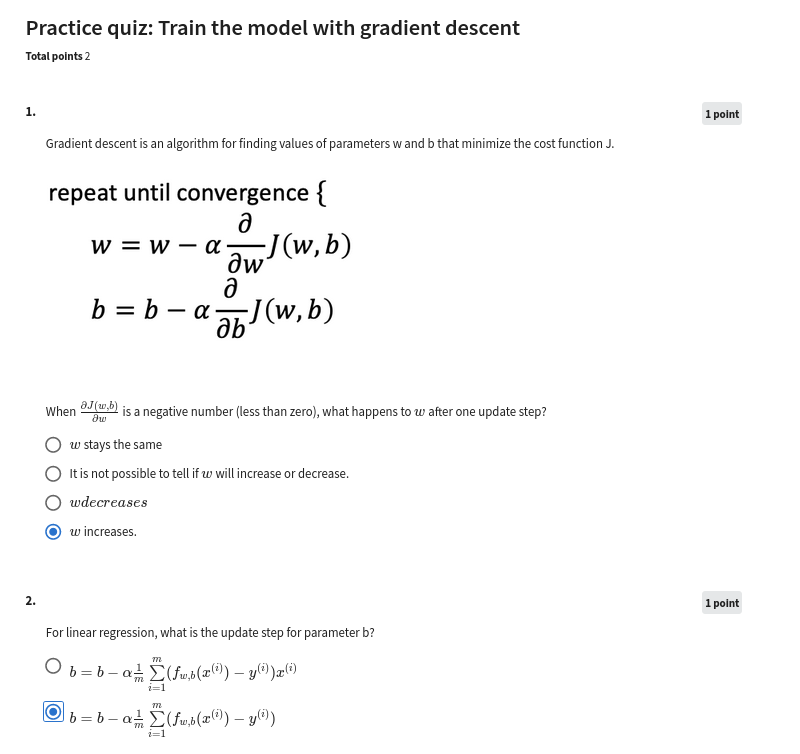

Practice Quiz

Quiz - 1

Quiz - 2

Quiz - 3

[ ]:

#ignore these lines, these are added to load some data

import sys,os

proj_path=f"{os.environ['HOME']}/my_web/Machine-Learning-Andrew-Ng"

sys.path.append(f"{proj_path}/source/source_files/Supervised_Machine_Learning_Regression_and_Classification")

sys.path.append(f"{proj_path}/source/source_files/Supervised_Machine_Learning_Regression_and_Classification/week1")

[ ]:

sys.path.append(f"{proj_path}/source_files/Supervised_Machine_Learning_Regression_and_Classification/week2")

sys.path.append(f"{proj_path}/source/source_files/Supervised_Machine_Learning_Regression_and_Classification/week3")

sys.path.append(f"{proj_path}/source/source_files/Supervised_Machine_Learning_Regression_and_Classification/week2/C1W2A1")

sys.path.append(f"{proj_path}/source/source_files/Supervised_Machine_Learning_Regression_and_Classification/week3/OptionalLabs")

sys.path.append(f"{proj_path}/source/source_files/Supervised_Machine_Learning_Regression_and_Classification/week3/C1W3A1")

Module - 2

Optional Lab W2: Python, NumPy and Vectorization

A brief introduction to some of the scientific computing used in this course. In particular the NumPy scientific computing package and its use with python.

Outline

1.1 Goals

1.2 Useful References

2 Python and NumPy

3 Vectors

3.1 Abstract

3.2 NumPy Arrays

3.3 Vector Creation

3.4 Operations on Vectors

4 Matrices

4.1 Abstract

4.2 NumPy Arrays

4.3 Matrix Creation

4.4 Operations on Matrices

[ ]:

import numpy as np # it is an unofficial standard to use np for numpy

import time

1.1 Goals

In this lab, you will: - Review the features of NumPy and Python that are used in Course 1

1.2 Useful References

NumPy Documentation including a basic introduction: NumPy.org - A challenging feature topic: NumPy Broadcasting

2 Python and NumPy

Python is the programming language we will be using in this course. It has a set of numeric data types and arithmetic operations. NumPy is a library that extends the base capabilities of python to add a richer data set including more numeric types, vectors, matrices, and many matrix functions. NumPy and python work together fairly seamlessly. Python arithmetic operators work on NumPy data types and many NumPy functions will accept python data types.

3 Vectors

3.1 Abstract

Vectors, as you will use them in this course, are ordered arrays of numbers. In notation, vectors are denoted with lower case bold letters such as \(\mathbf{x}\). The elements of a vector are all the same type. A vector does not, for example, contain both characters and numbers. The number of elements in the array is often referred to as the dimension though mathematicians may prefer rank. The vector shown has a dimension of \(n\). The elements of a vector can be referenced with an index. In math settings, indexes typically run from 1 to n. In computer science and these labs, indexing will typically run from 0 to n-1. In notation, elements of a vector, when referenced individually will indicate the index in a subscript, for example, the \(0^{th}\) element, of the vector \(\mathbf{x}\) is \(x_0\). Note, the x is not bold in this case.

3.2 NumPy Arrays

NumPy’s basic data structure is an indexable, n-dimensional array containing elements of the same type (dtype). Right away, you may notice we have overloaded the term ‘dimension’. Above, it was the number of elements in the vector, here, dimension refers to the number of indexes of an array. A one-dimensional or 1-D array has one index. In Course 1, we will represent vectors as NumPy 1-D arrays.

1-D array, shape (n,): n elements indexed [0] through [n-1]

3.3 Vector Creation

Data creation routines in NumPy will generally have a first parameter which is the shape of the object. This can either be a single value for a 1-D result or a tuple (n,m,…) specifying the shape of the result. Below are examples of creating vectors using these routines.

[ ]:

# NumPy routines which allocate memory and fill arrays with value

a = np.zeros(4); print(f"np.zeros(4) : a = {a}, a shape = {a.shape}, a data type = {a.dtype}")

a = np.zeros((4,)); print(f"np.zeros(4,) : a = {a}, a shape = {a.shape}, a data type = {a.dtype}")

a = np.random.random_sample(4); print(f"np.random.random_sample(4): a = {a}, a shape = {a.shape}, a data type = {a.dtype}")

Some data creation routines do not take a shape tuple:

[ ]:

# NumPy routines which allocate memory and fill arrays with value but do not accept shape as input argument

a = np.arange(4.); print(f"np.arange(4.): a = {a}, a shape = {a.shape}, a data type = {a.dtype}")

a = np.random.rand(4); print(f"np.random.rand(4): a = {a}, a shape = {a.shape}, a data type = {a.dtype}")

values can be specified manually as well.

[ ]:

# NumPy routines which allocate memory and fill with user specified values

a = np.array([5,4,3,2]); print(f"np.array([5,4,3,2]): a = {a}, a shape = {a.shape}, a data type = {a.dtype}")

a = np.array([5.,4,3,2]); print(f"np.array([5.,4,3,2]): a = {a}, a shape = {a.shape}, a data type = {a.dtype}")

These have all created a one-dimensional vector a with four elements. a.shape returns the dimensions. Here we see a.shape = (4,) indicating a 1-d array with 4 elements.

3.4 Operations on Vectors

Let’s explore some operations using vectors.

3.4.1 Indexing

a[2].[ ]:

#vector indexing operations on 1-D vectors

a = np.arange(10)

print(a)

#access an element

print(f"a[2].shape: {a[2].shape} a[2] = {a[2]}, Accessing an element returns a scalar")

# access the last element, negative indexes count from the end

print(f"a[-1] = {a[-1]}")

#indexs must be within the range of the vector or they will produce and error

try:

c = a[10]

except Exception as e:

print("The error message you'll see is:")

print(e)

3.4.2 Slicing

Slicing creates an array of indices using a set of three values (start:stop:step). A subset of values is also valid. Its use is best explained by example:

[ ]:

#vector slicing operations

a = np.arange(10)

print(f"a = {a}")

#access 5 consecutive elements (start:stop:step)

c = a[2:7:1]; print("a[2:7:1] = ", c)

# access 3 elements separated by two

c = a[2:7:2]; print("a[2:7:2] = ", c)

# access all elements index 3 and above

c = a[3:]; print("a[3:] = ", c)

# access all elements below index 3

c = a[:3]; print("a[:3] = ", c)

# access all elements

c = a[:]; print("a[:] = ", c)

3.4.3 Single vector operations

There are a number of useful operations that involve operations on a single vector.

[ ]:

a = np.array([1,2,3,4])

print(f"a : {a}")

# negate elements of a

b = -a

print(f"b = -a : {b}")

# sum all elements of a, returns a scalar

b = np.sum(a)

print(f"b = np.sum(a) : {b}")

b = np.mean(a)

print(f"b = np.mean(a): {b}")

b = a**2

print(f"b = a**2 : {b}")

3.4.4 Vector Vector element-wise operations

Most of the NumPy arithmetic, logical and comparison operations apply to vectors as well. These operators work on an element-by-element basis. For example

[ ]:

a = np.array([ 1, 2, 3, 4])

b = np.array([-1,-2, 3, 4])

print(f"Binary operators work element wise: {a + b}")

Of course, for this to work correctly, the vectors must be of the same size:

[ ]:

#try a mismatched vector operation

c = np.array([1, 2])

try:

d = a + c

except Exception as e:

print("The error message you'll see is:")

print(e)

3.4.5 Scalar Vector operations

Vectors can be ‘scaled’ by scalar values. A scalar value is just a number. The scalar multiplies all the elements of the vector.

[ ]:

a = np.array([1, 2, 3, 4])

# multiply a by a scalar

b = 5 * a

print(f"b = 5 * a : {b}")

3.4.6 Vector Vector dot product

The dot product is a mainstay of Linear Algebra and NumPy. This is an operation used extensively in this course and should be well understood. The dot product is shown below.

The dot product multiplies the values in two vectors element-wise and then sums the result. Vector dot product requires the dimensions of the two vectors to be the same.

Let’s implement our own version of the dot product below:

Using a for loop, implement a function which returns the dot product of two vectors. The function to return given inputs \(a\) and \(b\):

Assume both a and b are the same shape.

[ ]:

def my_dot(a, b):

"""

Compute the dot product of two vectors

Args:

a (ndarray (n,)): input vector

b (ndarray (n,)): input vector with same dimension as a

Returns:

x (scalar):

"""

x=0

for i in range(a.shape[0]):

x = x + a[i] * b[i]

return x

[ ]:

# test 1-D

a = np.array([1, 2, 3, 4])

b = np.array([-1, 4, 3, 2])

print(f"my_dot(a, b) = {my_dot(a, b)}")

Note, the dot product is expected to return a scalar value.

Let’s try the same operations using np.dot.

[ ]:

# test 1-D

a = np.array([1, 2, 3, 4])

b = np.array([-1, 4, 3, 2])

c = np.dot(a, b)

print(f"NumPy 1-D np.dot(a, b) = {c}, np.dot(a, b).shape = {c.shape} ")

c = np.dot(b, a)

print(f"NumPy 1-D np.dot(b, a) = {c}, np.dot(a, b).shape = {c.shape} ")

Above, you will note that the results for 1-D matched our implementation.

3.4.7 The Need for Speed: vector vs for loop

We utilized the NumPy library because it improves speed memory efficiency. Let’s demonstrate:

[ ]:

np.random.seed(1)

a = np.random.rand(10000000) # very large arrays

b = np.random.rand(10000000)

tic = time.time() # capture start time

c = np.dot(a, b)

toc = time.time() # capture end time

print(f"np.dot(a, b) = {c:.4f}")

print(f"Vectorized version duration: {1000*(toc-tic):.4f} ms ")

tic = time.time() # capture start time

c = my_dot(a,b)

toc = time.time() # capture end time

print(f"my_dot(a, b) = {c:.4f}")

print(f"loop version duration: {1000*(toc-tic):.4f} ms ")

del(a);del(b) #remove these big arrays from memory

So, vectorization provides a large speed up in this example. This is because NumPy makes better use of available data parallelism in the underlying hardware. GPU’s and modern CPU’s implement Single Instruction, Multiple Data (SIMD) pipelines allowing multiple operations to be issued in parallel. This is critical in Machine Learning where the data sets are often very large.

3.4.8 Vector Vector operations in Course 1

Vector Vector operations will appear frequently in course 1. Here is why: - Going forward, our examples will be stored in an array, X_train of dimension (m,n). This will be explained more in context, but here it is important to note it is a 2 Dimensional array or matrix (see next section on matrices). - w will be a 1-dimensional vector of shape (n,). - we will perform operations by looping through the examples, extracting each example to work on individually by indexing X. For

example:X[i] - X[i] returns a value of shape (n,), a 1-dimensional vector. Consequently, operations involving X[i] are often vector-vector.

That is a somewhat lengthy explanation, but aligning and understanding the shapes of your operands is important when performing vector operations.

[ ]:

# show common Course 1 example

X = np.array([[1],[2],[3],[4]])

w = np.array([2])

c = np.dot(X[1], w)

print(f"X[1] has shape {X[1].shape}")

print(f"w has shape {w.shape}")

print(f"c has shape {c.shape}")

4 Matrices

4.1 Abstract

Matrices, are two dimensional arrays. The elements of a matrix are all of the same type. In notation, matrices are denoted with capitol, bold letter such as \(\mathbf{X}\). In this and other labs, m is often the number of rows and n the number of columns. The elements of a matrix can be referenced with a two dimensional index. In math settings, numbers in the index typically run from 1 to n. In computer science and these labs, indexing will run from 0 to n-1.

Generic Matrix Notation, 1st index is row, 2nd is column

4.2 NumPy Arrays

NumPy’s basic data structure is an indexable, n-dimensional array containing elements of the same type (dtype). These were described earlier. Matrices have a two-dimensional (2-D) index [m,n].

In Course 1, 2-D matrices are used to hold training data. Training data is \(m\) examples by \(n\) features creating an (m,n) array. Course 1 does not do operations directly on matrices but typically extracts an example as a vector and operates on that. Below you will review: - data creation - slicing and indexing

4.3 Matrix Creation

The same functions that created 1-D vectors will create 2-D or n-D arrays. Here are some examples

Below, the shape tuple is provided to achieve a 2-D result. Notice how NumPy uses brackets to denote each dimension. Notice further than NumPy, when printing, will print one row per line.

[ ]:

a = np.zeros((1, 5))

print(f"a shape = {a.shape}, a = {a}")

a = np.zeros((2, 1))

print(f"a shape = {a.shape}, a = {a}")

a = np.random.random_sample((1, 1))

print(f"a shape = {a.shape}, a = {a}")

One can also manually specify data. Dimensions are specified with additional brackets matching the format in the printing above.

[ ]:

# NumPy routines which allocate memory and fill with user specified values

a = np.array([[5], [4], [3]]); print(f" a shape = {a.shape}, np.array: a = {a}")

a = np.array([[5], # One can also

[4], # separate values

[3]]); #into separate rows

print(f" a shape = {a.shape}, np.array: a = {a}")

4.4 Operations on Matrices

Let’s explore some operations using matrices.

4.4.1 Indexing

Matrices include a second index. The two indexes describe [row, column]. Access can either return an element or a row/column. See below:

[ ]:

#vector indexing operations on matrices

a = np.arange(6).reshape(-1, 2) #reshape is a convenient way to create matrices

print(f"a.shape: {a.shape}, \na= {a}")

#access an element

print(f"\na[2,0].shape: {a[2, 0].shape}, a[2,0] = {a[2, 0]}, type(a[2,0]) = {type(a[2, 0])} Accessing an element returns a scalar\n")

#access a row

print(f"a[2].shape: {a[2].shape}, a[2] = {a[2]}, type(a[2]) = {type(a[2])}")

It is worth drawing attention to the last example. Accessing a matrix by just specifying the row will return a 1-D vector.

a = np.arange(6).reshape(-1, 2)a = np.arange(6).reshape(3, 2)4.4.2 Slicing

Slicing creates an array of indices using a set of three values (start:stop:step). A subset of values is also valid. Its use is best explained by example:

[ ]:

#vector 2-D slicing operations

a = np.arange(20).reshape(-1, 10)

print(f"a = \n{a}")

#access 5 consecutive elements (start:stop:step)

print("a[0, 2:7:1] = ", a[0, 2:7:1], ", a[0, 2:7:1].shape =", a[0, 2:7:1].shape, "a 1-D array")

#access 5 consecutive elements (start:stop:step) in two rows

print("a[:, 2:7:1] = \n", a[:, 2:7:1], ", a[:, 2:7:1].shape =", a[:, 2:7:1].shape, "a 2-D array")

# access all elements

print("a[:,:] = \n", a[:,:], ", a[:,:].shape =", a[:,:].shape)

# access all elements in one row (very common usage)

print("a[1,:] = ", a[1,:], ", a[1,:].shape =", a[1,:].shape, "a 1-D array")

# same as

print("a[1] = ", a[1], ", a[1].shape =", a[1].shape, "a 1-D array")

Practice Quiz

Quiz-1

Quiz-2

Quiz-3

Assignment W2:

Practice Lab: Linear Regression

Welcome to your first practice lab! In this lab, you will implement linear regression with one variable to predict profits for a restaurant franchise.

Outline

1 - Packages

2 - Linear regression with one variable

2.1 Problem Statement

3 Dataset

4 Refresher on linear regression

5 Compute Cost

Exercise 1

6 Gradient descent

Exercise 2

6.1 Learning parameters using batch gradient descent

1 - Packages

First, let’s run the cell below to import all the packages that you will need during this assignment. - numpy is the fundamental package for working with matrices in Python. - matplotlib is a famous library to plot graphs in Python. - utils.py contains helper functions for this assignment. You do not need to modify code in this file.

[ ]:

import sys

#add modules from the path

sys.path.append("/home/amitk/my_web/Machine-Learning-Andrew-Ng/source/source_files/Supervised_Machine_Learning_Regression_and_Classification/week2/C1W2A1")

import numpy as np

import matplotlib.pyplot as plt

from utils import *

import copy

import math

%matplotlib inline

#to show graphs inline

2 - Problem Statement

Suppose you are the CEO of a restaurant franchise and are considering different cities for opening a new outlet. - You would like to expand your business to cities that may give your restaurant higher profits. - The chain already has restaurants in various cities and you have data for profits and populations from the cities. - You also have data on cities that are candidates for a new restaurant. - For these cities, you have the city population.

Can you use the data to help you identify which cities may potentially give your business higher profits?

3 - Dataset

load_data() function shown below loads the data into variables x_train and y_train - x_train is the population of a city - y_train is the profit of a restaurant in that city. A negative value for profit indicates a loss.X_train and y_train are numpy arrays.[ ]:

# load the dataset

x_train, y_train = load_data()

View the variables

The code below prints the variable x_train and the type of the variable.

[ ]:

# print x_train

print("Type of x_train:",type(x_train))

print("First five elements of x_train are:\n", x_train[:5])

x_train is a numpy array that contains decimal values that are all greater than zero. - These values represent the city population times 10,000 - For example, 6.1101 means that the population for that city is 61,101

Now, let’s print y_train

[ ]:

# print y_train

print("Type of y_train:",type(y_train))

print("First five elements of y_train are:\n", y_train[:5])

Similarly, y_train is a numpy array that has decimal values, some negative, some positive. - These represent your restaurant’s average monthly profits in each city, in units of $10,000. - For example, 17.592 represents $175,920 in average monthly profits for that city. - -2.6807 represents -$26,807 in average monthly loss for that city.

Check the dimensions of your variables

Another useful way to get familiar with your data is to view its dimensions.

Please print the shape of x_train and y_train and see how many training examples you have in your dataset.

[ ]:

print ('The shape of x_train is:', x_train.shape)

print ('The shape of y_train is: ', y_train.shape)

print ('Number of training examples (m):', len(x_train))

The city population array has 97 data points, and the monthly average profits also has 97 data points. These are NumPy 1D arrays.

Visualize your data

It is often useful to understand the data by visualizing it. - For this dataset, you can use a scatter plot to visualize the data, since it has only two properties to plot (profit and population). - Many other problems that you will encounter in real life have more than two properties (for example, population, average household income, monthly profits, monthly sales).When you have more than two properties, you can still use a scatter plot to see the relationship between each pair of properties.

[ ]:

# Create a scatter plot of the data. To change the markers to red "x",

# we used the 'marker' and 'c' parameters

plt.scatter(x_train, y_train, marker='x', c='r')

# Set the title

plt.title("Profits vs. Population per city")

# Set the y-axis label

plt.ylabel('Profit in $10,000')

# Set the x-axis label

plt.xlabel('Population of City in 10,000s')

plt.show()

Your goal is to build a linear regression model to fit this data. - With this model, you can then input a new city’s population, and have the model estimate your restaurant’s potential monthly profits for that city.

4 - Refresher on linear regression

In this practice lab, you will fit the linear regression parameters \((w,b)\) to your dataset. - The model function for linear regression, which is a function that maps from x (city population) to y (your restaurant’s monthly profit for that city) is represented as

To train a linear regression model, you want to find the best \((w,b)\) parameters that fit your dataset.

To compare how one choice of \((w,b)\) is better or worse than another choice, you can evaluate it with a cost function \(J(w,b)\)

\(J\) is a function of \((w,b)\). That is, the value of the cost \(J(w,b)\) depends on the value of \((w,b)\).

The choice of \((w,b)\) that fits your data the best is the one that has the smallest cost \(J(w,b)\).

To find the values \((w,b)\) that gets the smallest possible cost \(J(w,b)\), you can use a method called gradient descent.

With each step of gradient descent, your parameters \((w,b)\) come closer to the optimal values that will achieve the lowest cost \(J(w,b)\).

The trained linear regression model can then take the input feature \(x\) (city population) and output a prediction \(f_{w,b}(x)\) (predicted monthly profit for a restaurant in that city).

5 - Compute Cost

Gradient descent involves repeated steps to adjust the value of your parameter \((w,b)\) to gradually get a smaller and smaller cost \(J(w,b)\). - At each step of gradient descent, it will be helpful for you to monitor your progress by computing the cost \(J(w,b)\) as \((w,b)\) gets updated. - In this section, you will implement a function to calculate \(J(w,b)\) so that you can check the progress of your gradient descent implementation.

Cost function

As you may recall from the lecture, for one variable, the cost function for linear regression \(J(w,b)\) is defined as

You can think of \(f_{w,b}(x^{(i)})\) as the model’s prediction of your restaurant’s profit, as opposed to \(y^{(i)}\), which is the actual profit that is recorded in the data.

\(m\) is the number of training examples in the dataset

Model prediction

For linear regression with one variable, the prediction of the model \(f_{w,b}\) for an example \(x^{(i)}\) is representented as:

This is the equation for a line, with an intercept \(b\) and a slope \(w\)

Implementation

Please complete the compute_cost() function below to compute the cost \(J(w,b)\).

Exercise 1

Complete the compute_cost below to:

Iterate over the training examples, and for each example, compute:

The prediction of the model for that example

\[f_{wb}(x^{(i)}) = wx^{(i)} + b\]The cost for that example

\[cost^{(i)} = (f_{wb} - y^{(i)})^2\]

Return the total cost over all examples

\[J(\mathbf{w},b) = \frac{1}{2m} \sum\limits_{i = 0}^{m-1} cost^{(i)}\]Here, \(m\) is the number of training examples and \(\sum\) is the summation operator

If you get stuck, you can check out the hints presented after the cell below to help you with the implementation.

[ ]:

# UNQ_C1

# GRADED FUNCTION: compute_cost

def compute_cost(x, y, w, b):

"""

Computes the cost function for linear regression.

Args:

x (ndarray): Shape (m,) Input to the model (Population of cities)

y (ndarray): Shape (m,) Label (Actual profits for the cities)

w, b (scalar): Parameters of the model

Returns

total_cost (float): The cost of using w,b as the parameters for linear regression

to fit the data points in x and y

"""

# number of training examples

m = x.shape[0]

# You need to return this variable correctly

total_cost = 0

### START CODE HERE ###

cost=0

for i in range(m):

f_wb = w*x[i]+b

cost += (f_wb - y[i])**2

total_cost = cost/(2*m)

### END CODE HERE ###

return total_cost

Click for hints

You can represent a summation operator eg: \(h = \sum\limits_{i = 0}^{m-1} 2i\) in code as follows:

h = 0

for i in range(m):

h = h + 2*i

In this case, you can iterate over all the examples in

xusing a for loop and add thecostfrom each iteration to a variable (cost_sum) initialized outside the loop.Then, you can return the

total_costascost_sumdivided by2m.

Click for more hints

Here’s how you can structure the overall implementation for this function

def compute_cost(x, y, w, b): # number of training examples m = x.shape[0] # You need to return this variable correctly total_cost = 0 ### START CODE HERE ### # Variable to keep track of sum of cost from each example cost_sum = 0 # Loop over training examples for i in range(m): # Your code here to get the prediction f_wb for the ith example f_wb = # Your code here to get the cost associated with the ith example cost = # Add to sum of cost for each example cost_sum = cost_sum + cost # Get the total cost as the sum divided by (2*m) total_cost = (1 / (2 * m)) * cost_sum ### END CODE HERE ### return total_cost

If you’re still stuck, you can check the hints presented below to figure out how to calculate

f_wbandcost.

Hint to calculate f_wb For scalars \(a\), \(b\) and \(c\) (x[i], w and b are all scalars), you can calculate the equation \(h = ab + c\) in code as h = a * b + c

More hints to calculate f You can compute f_wb as f_wb = w * x[i] + b

Hint to calculate cost You can calculate the square of a variable z as z**2

More hints to calculate cost You can compute cost as cost = (f_wb - y[i]) ** 2

You can check if your implementation was correct by running the following test code:

[ ]:

# Compute cost with some initial values for paramaters w, b

initial_w = 2

initial_b = 1

cost = compute_cost(x_train, y_train, initial_w, initial_b)

print(type(cost))

print(f'Cost at initial w (zeros): {cost:.3f}')

# Public tests

from public_tests import *

compute_cost_test(compute_cost)

Expected Output:

Cost at initial w (zeros): 75.203 |

6 - Gradient descent

In this section, you will implement the gradient for parameters \(w, b\) for linear regression.

As described in the lecture videos, the gradient descent algorithm is:

\[\frac{\partial J(w,b)}{\partial b} = \frac{1}{m} \sum\limits_{i = 0}^{m-1} (f_{w,b}(x^{(i)}) - y^{(i)}) \tag{2}\]\[\frac{\partial J(w,b)}{\partial w} = \frac{1}{m} \sum\limits_{i = 0}^{m-1} (f_{w,b}(x^{(i)}) -y^{(i)})x^{(i)} \tag{3}\]* m is the number of training examples in the dataset

\(f_{w,b}(x^{(i)})\) is the model’s prediction, while \(y^{(i)}\), is the target value

You will implement a function called compute_gradient which calculates \(\frac{\partial J(w)}{\partial w}\), \(\frac{\partial J(w)}{\partial b}\)

Exercise 2

Please complete the compute_gradient function to:

Iterate over the training examples, and for each example, compute:

The prediction of the model for that example

\[f_{wb}(x^{(i)}) = wx^{(i)} + b\]The gradient for the parameters \(w, b\) from that example

\[\frac{\partial J(w,b)}{\partial b}^{(i)} = (f_{w,b}(x^{(i)}) - y^{(i)})\]\[\frac{\partial J(w,b)}{\partial w}^{(i)} = (f_{w,b}(x^{(i)}) -y^{(i)})x^{(i)}\]

Return the total gradient update from all the examples

\[\frac{\partial J(w,b)}{\partial b} = \frac{1}{m} \sum\limits_{i = 0}^{m-1} \frac{\partial J(w,b)}{\partial b}^{(i)}\]\[\frac{\partial J(w,b)}{\partial w} = \frac{1}{m} \sum\limits_{i = 0}^{m-1} \frac{\partial J(w,b)}{\partial w}^{(i)}\]Here, \(m\) is the number of training examples and \(\sum\) is the summation operator

If you get stuck, you can check out the hints presented after the cell below to help you with the implementation.

[ ]:

# UNQ_C2

# GRADED FUNCTION: compute_gradient

def compute_gradient(x, y, w, b):

"""

Computes the gradient for linear regression

Args:

x (ndarray): Shape (m,) Input to the model (Population of cities)

y (ndarray): Shape (m,) Label (Actual profits for the cities)

w, b (scalar): Parameters of the model

Returns

dj_dw (scalar): The gradient of the cost w.r.t. the parameters w

dj_db (scalar): The gradient of the cost w.r.t. the parameter b

"""

# Number of training examples

m = x.shape[0]

# You need to return the following variables correctly

dj_dw = 0

dj_db = 0

### START CODE HERE ###

for i in range(m):

f_wb = w*x[i]+b

dj_db += f_wb - y[i]

dj_dw += (f_wb - y[i])*x[i]

dj_dw /= m

dj_db /= m

### END CODE HERE ###

return dj_dw, dj_db

Click for hints

You can represent a summation operator eg: \(h = \sum\limits_{i = 0}^{m-1} 2i\) in code as follows:

python h = 0 for i in range(m): h = h + 2*iIn this case, you can iterate over all the examples in

xusing a for loop and for each example, keep adding the gradient from that example to the variablesdj_dwanddj_dbwhich are initialized outside the loop.

- Then, you can return

dj_dwanddj_dbboth divided bym.Click for more hints

Here’s how you can structure the overall implementation for this function

def compute_gradient(x, y, w, b): """ Computes the gradient for linear regression Args: x (ndarray): Shape (m,) Input to the model (Population of cities) y (ndarray): Shape (m,) Label (Actual profits for the cities) w, b (scalar): Parameters of the model Returns dj_dw (scalar): The gradient of the cost w.r.t. the parameters w dj_db (scalar): The gradient of the cost w.r.t. the parameter b """ # Number of training examples m = x.shape[0] # You need to return the following variables correctly dj_dw = 0 dj_db = 0 ### START CODE HERE ### # Loop over examples for i in range(m): # Your code here to get prediction f_wb for the ith example f_wb = # Your code here to get the gradient for w from the ith example dj_dw_i = # Your code here to get the gradient for b from the ith example dj_db_i = # Update dj_db : In Python, a += 1 is the same as a = a + 1 dj_db += dj_db_i # Update dj_dw dj_dw += dj_dw_i # Divide both dj_dw and dj_db by m dj_dw = dj_dw / m dj_db = dj_db / m ### END CODE HERE ### return dj_dw, dj_db

If you’re still stuck, you can check the hints presented below to figure out how to calculate

f_wbandcost.Hint to calculate f_wb You did this in the previous exercise! For scalars \(a\), \(b\) and \(c\) (x[i], w and b are all scalars), you can calculate the equation \(h = ab + c\) in code as h = a * b + c

More hints to calculate f You can compute f_wb as f_wb = w * x[i] + b

Hint to calculate dj_dw_i For scalars \(a\), \(b\) and \(c\) (f_wb, y[i] and x[i] are all scalars), you can calculate the equation \(h = (a - b)c\) in code as h = (a-b)*c

More hints to calculate f You can compute dj_dw_i as dj_dw_i = (f_wb - y[i]) * x[i]

Hint to calculate dj_db_i You can compute dj_db_i as dj_db_i = f_wb - y[i]

Run the cells below to check your implementation of the compute_gradient function with two different initializations of the parameters \(w\),\(b\).

[ ]:

# Compute and display gradient with w initialized to zeroes

initial_w = 0

initial_b = 0

tmp_dj_dw, tmp_dj_db = compute_gradient(x_train, y_train, initial_w, initial_b)

print('Gradient at initial w, b (zeros):', tmp_dj_dw, tmp_dj_db)

compute_gradient_test(compute_gradient)

Now let’s run the gradient descent algorithm implemented above on our dataset.

Expected Output:

Gradient at initial , b (zeros) | -65.32884975 -5.83913505154639 |

[ ]:

# Compute and display cost and gradient with non-zero w

test_w = 0.2

test_b = 0.2

tmp_dj_dw, tmp_dj_db = compute_gradient(x_train, y_train, test_w, test_b)

print('Gradient at test w, b:', tmp_dj_dw, tmp_dj_db)

Expected Output:

Gradient at test w | -47.41610118 -4.007175051546391 |

6.1 Learning parameters using batch gradient descent

You will now find the optimal parameters of a linear regression model by using batch gradient descent. Recall batch refers to running all the examples in one iteration. - You don’t need to implement anything for this part. Simply run the cells below.

A good way to verify that gradient descent is working correctly is to look at the value of \(J(w,b)\) and check that it is decreasing with each step.

Assuming you have implemented the gradient and computed the cost correctly and you have an appropriate value for the learning rate alpha, \(J(w,b)\) should never increase and should converge to a steady value by the end of the algorithm.

[ ]:

def gradient_descent(x, y, w_in, b_in, cost_function, gradient_function, alpha, num_iters):

"""

Performs batch gradient descent to learn theta. Updates theta by taking

num_iters gradient steps with learning rate alpha

Args:

x : (ndarray): Shape (m,)

y : (ndarray): Shape (m,)

w_in, b_in : (scalar) Initial values of parameters of the model

cost_function: function to compute cost

gradient_function: function to compute the gradient

alpha : (float) Learning rate

num_iters : (int) number of iterations to run gradient descent

Returns

w : (ndarray): Shape (1,) Updated values of parameters of the model after

running gradient descent

b : (scalar) Updated value of parameter of the model after

running gradient descent

"""

# number of training examples

m = len(x)

# An array to store cost J and w's at each iteration — primarily for graphing later

J_history = []

w_history = []

w = copy.deepcopy(w_in) #avoid modifying global w within function

b = b_in

for i in range(num_iters):

# Calculate the gradient and update the parameters

dj_dw, dj_db = gradient_function(x, y, w, b )

# Update Parameters using w, b, alpha and gradient

w = w - alpha * dj_dw

b = b - alpha * dj_db

# Save cost J at each iteration

if i<100000: # prevent resource exhaustion

cost = cost_function(x, y, w, b)

J_history.append(cost)

# Print cost every at intervals 10 times or as many iterations if < 10

if i% math.ceil(num_iters/10) == 0:

w_history.append(w)

print(f"Iteration {i:4}: Cost {float(J_history[-1]):8.2f} ")

return w, b, J_history, w_history #return w and J,w history for graphing

Now let’s run the gradient descent algorithm above to learn the parameters for our dataset.

[ ]:

# initialize fitting parameters. Recall that the shape of w is (n,)

initial_w = 20

initial_b = 5

# some gradient descent settings

iterations = 15000

alpha = 0.01

w,b,_,_ = gradient_descent(x_train ,y_train, initial_w, initial_b, compute_cost, compute_gradient, alpha, iterations)

print("w,b found by gradient descent:", w, b)

Expected Output:

w, b found by gradient descent | 1.16636235 -3.63029143940436 |

We will now use the final parameters from gradient descent to plot the linear fit.

Recall that we can get the prediction for a single example \(f(x^{(i)})= wx^{(i)}+b\).

To calculate the predictions on the entire dataset, we can loop through all the training examples and calculate the prediction for each example. This is shown in the code block below.

Assignment2: My solution

My Solution

#my solution: Dictate learning rate automatically,costrain parameter within boundry

import sys

#add modules from the path

sys.path.append("/home/amitk/my_web/Machine-Learning-Andrew-Ng/source/source_files/Supervised_Machine_Learning_Regression_and_Classification/week2/C1W2A1")

import numpy as np

import matplotlib.pyplot as plt

from utils import *

import copy

import math

%matplotlib inline

#to show graphs inline

# load the dataset

#x_train, y_train = load_data()

x_train=np.linspace(5,25,100)

y_train= 3*x_train-8 + np.random.normal(0,1,len(x_train))

def model(x,theta):

w,b=theta

return w*x+b

def dmodel_w(x,theta):

w,b=theta

return x

def dmodel_b(x,theta):

w,b=theta

return 1.

def cost(x,theta,y):

cf= ( model(x,theta) - y)**2

return np.sum(cf)/2/np.shape(x_train)[0]

def dcost_w(x,theta,y):

return np.sum((model(x,theta)-y)*dmodel_w(x,theta))/len(x)

def dcost_b(x,theta,y):

return np.sum((model(x,theta)-y)*dmodel_b(x,theta))/len(x)

def compute_gradient(x,theta,y):

return dcost_w(x,theta,y),dcost_b(x,theta,y)

np.set_printoptions(precision=2)

def gradient_decent(x,y,theta,alpha,niter):

w,b=theta

if theta[1]>0: #constraining parameters

b=-theta[1]

cost_i=np.zeros(niter)

for i in np.arange(niter):

if i>1:

if np.abs((cost_i[i]-cost_i[i-1])/cost_i[i])<0.05:

alpha/=2

dcw,dcb= compute_gradient(x,theta,y)

w = w-alpha*dcw

b = b-alpha*dcb

theta=w,b

cost_i[i]=cost(x,theta,y)

if i>1:

if cost_i[i]>cost_i[i-1]:

alpha/=2

#print(cost_i[i],alpha)

#print(theta)

return cost_i,theta

niter=10000

Win=20

Bin=5

alpha=0.5

theta_in=Win,Bin

grad_dec_result,theta_f=gradient_decent(x_train,y_train,theta_in,alpha,niter)

wf,bf=theta_f

print(wf,bf,grad_dec_result[-1])

#print(compute_gradient(x_train,y_train,0.2,0.2))

ax=plt.subplot(121)

plt.plot(np.arange(niter),grad_dec_result,".")

plt.yscale("log")

plt.xlabel("No of steps")

plt.ylabel("Cost function")

plt.ylim(bottom=0.01)

#plt.xlim(0,100)

#plt.show()

m = x_train.shape[0]

predictedamit = np.zeros(m)

for i in range(m):

predictedamit[i] = wf * x_train[i] + bf

ax=plt.subplot(122)

# Plot the linear fit

#plt.plot(x_train, predicted, c = "b")

plt.plot(x_train, predictedamit, c = "g",label="Predcited model")

# Create a scatter plot of the data.

plt.scatter(x_train, y_train, marker='x', c='r')

# Set the title

plt.title("Model fit")

# Set the y-axis label

plt.ylabel('training data')

# Set the x-axis label

plt.xlabel('training input')

plt.legend()

plt.tight_layout()

How to write summary

import math

%matplotlib inline

plt.xlabel('Area of triangle')

See hints

import math

%matplotlib inline

plt.xlabel('Area of triangle')

Hint to calculate f_wb For scalars \(a\), \(b\) and \(c\) (x[i], w and b are all scalars), you can calculate the equation \(h = ab + c\) in code as h = a * b + c

More hints to calculate f You can compute f_wb as f_wb = w * x[i] + b

Hint to calculate cost You can calculate the square of a variable z as z**2

More hints to calculate cost You can compute cost as cost = (f_wb - y[i]) ** 2

[ ]:

m = x_train.shape[0]

predicted = np.zeros(m)

for i in range(m):

predicted[i] = w * x_train[i] + b

We will now plot the predicted values to see the linear fit.

[ ]:

# Plot the linear fit

plt.plot(x_train, predicted, c = "b")

#plt.plot(x_train, predictedamit, c = "g")

# Create a scatter plot of the data.

plt.scatter(x_train, y_train, marker='x', c='r')

# Set the title

plt.title("Profits vs. Population per city")

# Set the y-axis label

plt.ylabel('Profit in $10,000')

# Set the x-axis label

plt.xlabel('Population of City in 10,000s')

Your final values of \(w,b\) can also be used to make predictions on profits. Let’s predict what the profit would be in areas of 35,000 and 70,000 people.

The model takes in population of a city in 10,000s as input.

Therefore, 35,000 people can be translated into an input to the model as

np.array([3.5])Similarly, 70,000 people can be translated into an input to the model as

np.array([7.])

[ ]:

predict1 = 3.5 * w + b

print('For population = 35,000, we predict a profit of $%.2f' % (predict1*10000))

predict2 = 7.0 * w + b

print('For population = 70,000, we predict a profit of $%.2f' % (predict2*10000))

Expected Output:

For population = 35,000, we predict a profit of | $4519.77 |

For population = 70,000, we predict a profit of | $45342.45 |

Module - 3

Optional Lab W3

Optional Lab - 3.1: Classification

In this lab, you will contrast regression and classification.

[ ]:

import os,sys

proj_path=f"{os.environ['HOME']}/my_web/Machine-Learning-Andrew-Ng"

module3=f"{proj_path}/source/source_files/Supervised_Machine_Learning_Regression_and_Classification/"

os.chdir(module3)

[ ]:

import matplotlib.pyplot as plt

plt.style.use("week3/OptionalLabs/deeplearning.mplstyle")

sys.path.append(f"{module3}/week3/OptionalLabs")

from lab_utils_common import dlc, plot_data

from plt_one_addpt_onclick import plt_one_addpt_onclick

import numpy as np

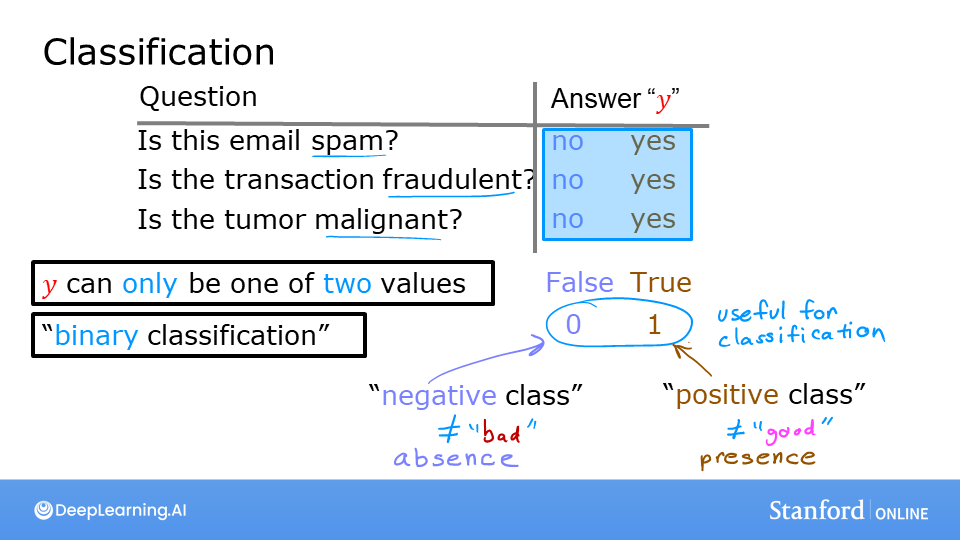

Classification Problems

Examples of classification problems are things like: identifying email as Spam or Not Spam or determining if a tumor is malignant or benign. In particular, these are examples of binary classification where there are two possible outcomes. Outcomes can be described in pairs of ‘positive’/’negative’ such as ‘yes’/’no, ‘true’/’false’ or ‘1’/’0’.

Examples of classification problems are things like: identifying email as Spam or Not Spam or determining if a tumor is malignant or benign. In particular, these are examples of binary classification where there are two possible outcomes. Outcomes can be described in pairs of ‘positive’/’negative’ such as ‘yes’/’no, ‘true’/’false’ or ‘1’/’0’.

Plots of classification data sets often use symbols to indicate the outcome of an example. In the plots below, ‘X’ is used to represent the positive values while ‘O’ represents negative outcomes.

[ ]:

x_train = np.array([0., 1, 2, 3, 4, 5])

y_train = np.array([0, 0, 0, 1, 1, 1])

X_train2 = np.array([[0.5, 1.5], [1,1], [1.5, 0.5], [3, 0.5], [2, 2], [1, 2.5]])

y_train2 = np.array([0, 0, 0, 1, 1, 1])

[ ]:

pos = y_train == 1

neg = y_train == 0

fig,ax = plt.subplots(1,2,figsize=(8,3))

#plot 1, single variable

ax[0].scatter(x_train[pos], y_train[pos], marker='x', s=80, c = 'red', label="y=1")

ax[0].scatter(x_train[neg], y_train[neg], marker='o', s=100, label="y=0", facecolors='none', edgecolors=dlc["dlblue"],lw=3)

ax[0].set_ylim(-0.08,1.1)

ax[0].set_ylabel('y', fontsize=12)

ax[0].set_xlabel('x', fontsize=12)

ax[0].set_title('one variable plot')

ax[0].legend()

#plot 2, two variables

plot_data(X_train2, y_train2, ax[1])

ax[1].axis([0, 4, 0, 4])

ax[1].set_ylabel('$x_1$', fontsize=12)

ax[1].set_xlabel('$x_0$', fontsize=12)

ax[1].set_title('two variable plot')

ax[1].legend()

plt.tight_layout()

plt.show()

Note in the plots above: - In the single variable plot, positive results are shown both a red ‘X’s and as y=1. Negative results are blue ‘O’s and are located at y=0. - Recall in the case of linear regression, y would not have been limited to two values but could have been any value. - In the two-variable plot, the y axis is not available. Positive results are shown as red ‘X’s, while negative results use the blue ‘O’ symbol. - Recall in the case of linear regression with multiple variables, y would not have been limited to two values and a similar plot would have been three-dimensional.

Linear Regression approach

In the previous week, you applied linear regression to build a prediction model. Let’s try that approach here using the simple example that was described in the lecture. The model will predict if a tumor is benign or malignant based on tumor size. Try the following: - Click on ‘Run Linear Regression’ to find the best linear regression model for the given data. - Note the resulting linear model does not match the data well. One option to improve the results is to apply a threshold. - Tick the box on the ‘Toggle 0.5 threshold’ to show the predictions if a threshold is applied. - These predictions look good, the predictions match the data - Important: Now, add further ‘malignant’ data points on the far right, in the large tumor size range (near 10), and re-run linear regression. - Now, the model predicts the larger tumor, but data point at x=3 is being incorrectly predicted! - to clear/renew the plot, rerun the cell containing the plot command.

[ ]:

w_in = np.zeros((1))

b_in = 0

plt.close('all')

addpt = plt_one_addpt_onclick( x_train,y_train, w_in, b_in, logistic=False)

The example above demonstrates that the linear model is insufficient to model categorical data. The model can be extended as described in the following lab.

In this lab you: - explored categorical data sets and plotting - determined that linear regression was insufficient for a classification problem.

Optional Lab - 3.2: Logistic Regression

In this ungraded lab, you will - explore the sigmoid function (also known as the logistic function) - explore logistic regression; which uses the sigmoid function

[ ]:

import numpy as np

%matplotlib widget

import matplotlib.pyplot as plt

from plt_one_addpt_onclick import plt_one_addpt_onclick

from lab_utils_common import draw_vthresh

plt.style.use('week3/OptionalLabs/deeplearning.mplstyle')

Sigmoid or Logistic Function

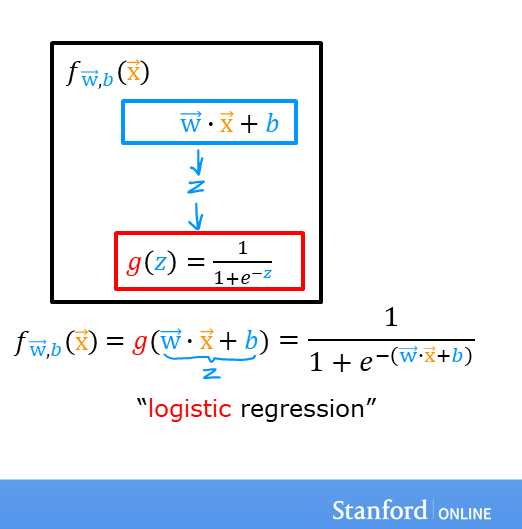

|6ad9867be1614455a28dddf811316f6f|As discussed in the lecture videos, for a classification task, we can start by using our linear regression model, \(f_{\mathbf{w},b}(\mathbf{x}^{(i)}) = \mathbf{w} \cdot \mathbf{x}^{(i)} + b\), to predict \(y\) given \(x\). - However, we would like the predictions of our classification model to be between 0 and 1 since our output variable \(y\) is either 0 or 1. - This can be accomplished by using a “sigmoid function” which maps all input values to values between 0 and 1.

Let’s implement the sigmoid function and see this for ourselves.

Formula for Sigmoid function

The formula for a sigmoid function is as follows -

\(g(z) = \frac{1}{1+e^{-z}}\tag{1}\)

In the case of logistic regression, z (the input to the sigmoid function), is the output of a linear regression model. - In the case of a single example, \(z\) is scalar. - in the case of multiple examples, \(z\) may be a vector consisting of \(m\) values, one for each example. - The implementation of the sigmoid function should cover both of these potential input formats. Let’s implement this in Python.

NumPy has a function called `exp() <https://numpy.org/doc/stable/reference/generated/numpy.exp.html>`__, which offers a convenient way to calculate the exponential ( \(e^{z}\)) of all elements in the input array (z).

It also works with a single number as an input, as shown below.

[ ]:

# Input is an array.

input_array = np.array([1,2,3])

exp_array = np.exp(input_array)

print("Input to exp:", input_array)

print("Output of exp:", exp_array)

# Input is a single number

input_val = 1

exp_val = np.exp(input_val)

print("Input to exp:", input_val)

print("Output of exp:", exp_val)

The sigmoid function is implemented in python as shown in the cell below.

[ ]:

def sigmoid(z):

"""

Compute the sigmoid of z

Args:

z (ndarray): A scalar, numpy array of any size.

Returns:

g (ndarray): sigmoid(z), with the same shape as z

"""

g = 1/(1+np.exp(-z))

return g

Let’s see what the output of this function is for various value of z

[ ]:

# Generate an array of evenly spaced values between -10 and 10

z_tmp = np.arange(-10,11)

# Use the function implemented above to get the sigmoid values

y = sigmoid(z_tmp)

# Code for pretty printing the two arrays next to each other

np.set_printoptions(precision=3)

print("Input (z), Output (sigmoid(z))")

print(np.c_[z_tmp, y])

The values in the left column are z, and the values in the right column are sigmoid(z). As you can see, the input values to the sigmoid range from -10 to 10, and the output values range from 0 to 1.

Now, let’s try to plot this function using the matplotlib library.

[ ]:

# Plot z vs sigmoid(z)

fig,ax = plt.subplots(1,1,figsize=(5,3))

ax.plot(z_tmp, y, c="b")

ax.set_title("Sigmoid function")

ax.set_ylabel('sigmoid(z)')

ax.set_xlabel('z')

draw_vthresh(ax,0)

As you can see, the sigmoid function approaches 0 as z goes to large negative values and approaches 1 as z goes to large positive values.

Logistic Regression

A logistic regression model applies the sigmoid to the familiar linear regression model as shown below:

A logistic regression model applies the sigmoid to the familiar linear regression model as shown below:

where

\(g(z) = \frac{1}{1+e^{-z}}\tag{3}\)

[ ]:

x_train = np.array([0., 1, 2, 3, 4, 5])

y_train = np.array([0, 0, 0, 1, 1, 1])

w_in = np.zeros((1))

b_in = 0

Try the following steps: - Click on ‘Run Logistic Regression’ to find the best logistic regression model for the given training data - Note the resulting model fits the data quite well. - Note, the orange line is ‘\(z\)’ or \(\mathbf{w} \cdot \mathbf{x}^{(i)} + b\) above. It does not match the line in a linear regression model. Further improve these results by applying a threshold. - Tick the box on the ‘Toggle 0.5 threshold’ to show the predictions if a threshold is applied. - These predictions look good. The predictions match the data - Now, add further data points in the large tumor size range (near 10), and re-run logistic regression. - unlike the linear regression model, this model continues to make correct predictions

[ ]:

plt.close('all')

addpt = plt_one_addpt_onclick( x_train,y_train, w_in, b_in, logistic=True)

You have explored the use of the sigmoid function in logistic regression.

Optional Lab - 3.3: Logistic Regression, Decision Boundary

Goals

In this lab, you will: - Plot the decision boundary for a logistic regression model. This will give you a better sense of what the model is predicting.

[ ]:

import numpy as np

%matplotlib widget

import matplotlib.pyplot as plt

from lab_utils_common import plot_data, sigmoid, draw_vthresh

#plt.style.use('week3/OptionalLabs/deeplearning.mplstyle')

Dataset

Let’s suppose you have following training dataset - The input variable X is a numpy array which has 6 training examples, each with two features - The output variable y is also a numpy array with 6 examples, and y is either 0 or 1

[ ]:

X = np.array([[0.5, 1.5], [1,1], [1.5, 0.5], [3, 0.5], [2, 2], [1, 2.5]])

y = np.array([0, 0, 0, 1, 1, 1]).reshape(-1,1)

Plot data

Let’s use a helper function to plot this data. The data points with label \(y=1\) are shown as red crosses, while the data points with label \(y=0\) are shown as blue circles.

[ ]:

fig,ax = plt.subplots(1,1,figsize=(4,4))

plot_data(X, y, ax)

ax.axis([0, 4, 0, 3.5])

ax.set_ylabel('$x_1$')

ax.set_xlabel('$x_0$')

plt.show()

Logistic regression model

Suppose you’d like to train a logistic regression model on this data which has the form

\(f(x) = g(w_0x_0+w_1x_1 + b)\)

where \(g(z) = \frac{1}{1+e^{-z}}\), which is the sigmoid function

Let’s say that you trained the model and get the parameters as \(b = -3, w_0 = 1, w_1 = 1\). That is,

\(f(x) = g(x_0+x_1-3)\)

(You’ll learn how to fit these parameters to the data further in the course)

Let’s try to understand what this trained model is predicting by plotting its decision boundary

Refresher on logistic regression and decision boundary

Recall that for logistic regression, the model is represented as

\[f_{\mathbf{w},b}(\mathbf{x}^{(i)}) = g(\mathbf{w} \cdot \mathbf{x}^{(i)} + b) \tag{1}\]where \(g(z)\) is known as the sigmoid function and it maps all input values to values between 0 and 1:

\(g(z) = \frac{1}{1+e^{-z}}\tag{2}\) and \(\mathbf{w} \cdot \mathbf{x}\) is the vector dot product:

\[\mathbf{w} \cdot \mathbf{x} = w_0 x_0 + w_1 x_1\]We interpret the output of the model (\(f_{\mathbf{w},b}(x)\)) as the probability that \(y=1\) given \(\mathbf{x}\) and parameterized by \(\mathbf{w}\) and \(b\).

Therefore, to get a final prediction (\(y=0\) or \(y=1\)) from the logistic regression model, we can use the following heuristic -

if \(f_{\mathbf{w},b}(x) >= 0.5\), predict \(y=1\)

if \(f_{\mathbf{w},b}(x) < 0.5\), predict \(y=0\)

Let’s plot the sigmoid function to see where \(g(z) >= 0.5\)

[ ]:

# Plot sigmoid(z) over a range of values from -10 to 10

z = np.arange(-10,11)

fig,ax = plt.subplots(1,1,figsize=(5,3))

# Plot z vs sigmoid(z)

ax.plot(z, sigmoid(z), c="b")

ax.set_title("Sigmoid function")

ax.set_ylabel('sigmoid(z)')

ax.set_xlabel('z')

draw_vthresh(ax,0)

As you can see, \(g(z) >= 0.5\) for \(z >=0\)

For a logistic regression model, \(z = \mathbf{w} \cdot \mathbf{x} + b\). Therefore,

if \(\mathbf{w} \cdot \mathbf{x} + b >= 0\), the model predicts \(y=1\)

if \(\mathbf{w} \cdot \mathbf{x} + b < 0\), the model predicts \(y=0\)

Plotting decision boundary

Now, let’s go back to our example to understand how the logistic regression model is making predictions.

Our logistic regression model has the form

\(f(\mathbf{x}) = g(-3 + x_0+x_1)\)

From what you’ve learnt above, you can see that this model predicts \(y=1\) if \(-3 + x_0+x_1 >= 0\)

Let’s see what this looks like graphically. We’ll start by plotting \(-3 + x_0+x_1 = 0\), which is equivalent to \(x_1 = 3 - x_0\).

[ ]:

#plotting some decision boundry

import numpy as np

import matplotlib.pyplot as plt

x0 = np.arange(0,2.1,0.01)

x1 = np.sqrt(4 - x0**2)

fig,ax = plt.subplots(1,1,figsize=(5,4))

# Plot the decision boundary

ax.plot(x0,x1, c="b")

ax.axis([0, 4, 0, 4])

# Fill the region below the line

ax.fill_between(x0,x1, alpha=0.2)

# Plot the original data

ax.set_ylabel(r'$x_1$')

ax.set_xlabel(r'$x_0$')

plt.show()

[ ]:

# Choose values between 0 and 6

x0 = np.arange(0,6)

x1 = 3 - x0

fig,ax = plt.subplots(1,1,figsize=(5,4))

# Plot the decision boundary

ax.plot(x0,x1, c="b")

ax.axis([0, 4, 0, 3.5])

# Fill the region below the line

ax.fill_between(x0,x1, alpha=0.2)

# Plot the original data

plot_data(X,y,ax)

ax.set_ylabel(r'$x_1$')

ax.set_xlabel(r'$x_0$')

plt.show()

In the plot above, the blue line represents the line \(x_0 + x_1 - 3 = 0\) and it should intersect the x1 axis at 3 (if we set \(x_1\) = 3, \(x_0\) = 0) and the x0 axis at 3 (if we set \(x_1\) = 0, \(x_0\) = 3).

The shaded region represents \(-3 + x_0+x_1 < 0\). The region above the line is \(-3 + x_0+x_1 > 0\).

Any point in the shaded region (under the line) is classified as \(y=0\). Any point on or above the line is classified as \(y=1\). This line is known as the “decision boundary”.

As we’ve seen in the lectures, by using higher order polynomial terms (eg: \(f(x) = g( x_0^2 + x_1 -1)\), we can come up with more complex non-linear boundaries.

You have explored the decision boundary in the context of logistic regression.

Optional Lab - 3.4: Logistic Regression, Logistic Loss

In this ungraded lab, you will: - explore the reason the squared error loss is not appropriate for logistic regression - explore the logistic loss function

[ ]:

[ ]:

import numpy as np

%matplotlib widget

import matplotlib.pyplot as plt

from plt_logistic_loss import plt_logistic_cost, plt_two_logistic_loss_curves, plt_simple_example

from plt_logistic_loss import soup_bowl, plt_logistic_squared_error

plt.style.use('week3/OptionalLabs/deeplearning.mplstyle')

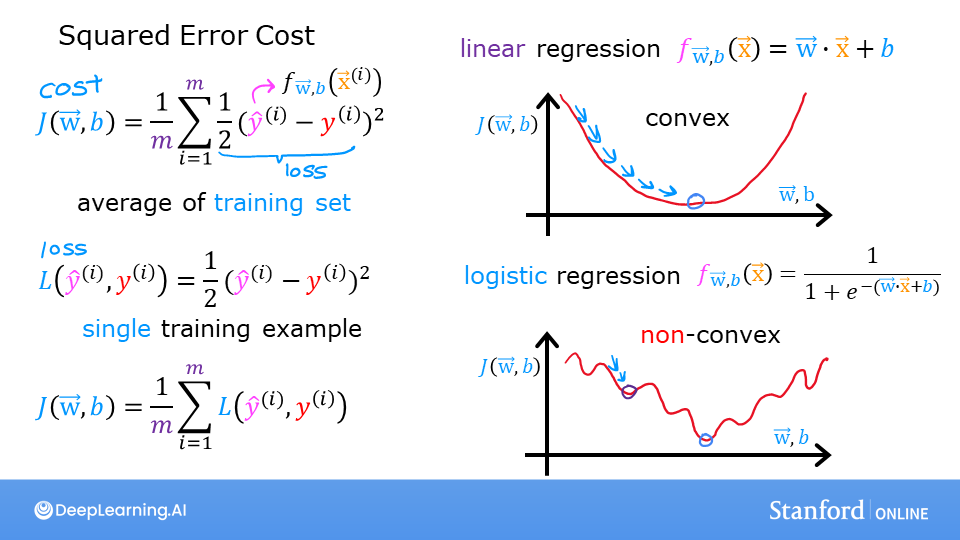

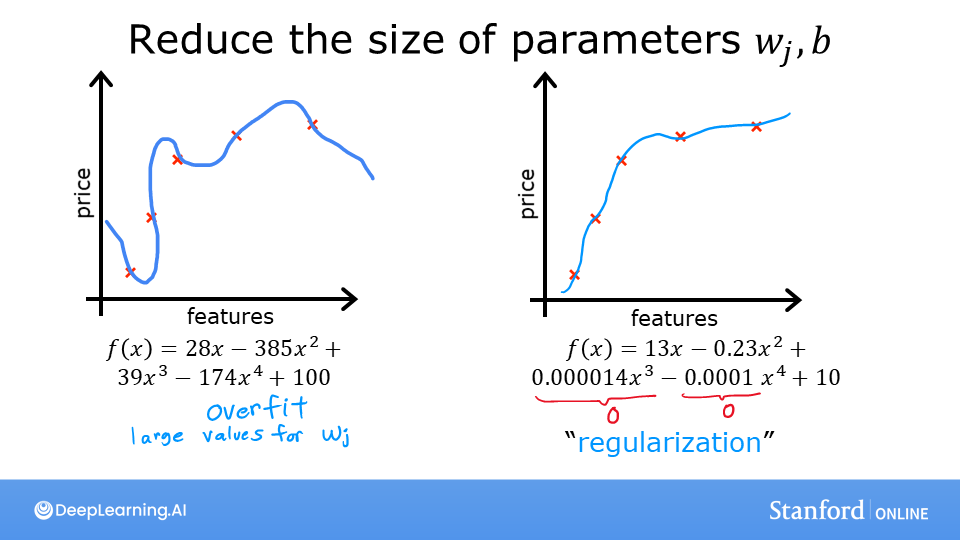

Squared error for logistic regression?

Recall for Linear Regression we have used the squared error cost function: The equation for the squared error cost with one variable is:

Recall for Linear Regression we have used the squared error cost function: The equation for the squared error cost with one variable is:

where

Recall, the squared error cost had the nice property that following the derivative of the cost leads to the minimum.

[ ]:

soup_bowl()

This cost function worked well for linear regression, it is natural to consider it for logistic regression as well. However, as the slide above points out, \(f_{wb}(x)\) now has a non-linear component, the sigmoid function: \(f_{w,b}(x^{(i)}) = sigmoid(wx^{(i)} + b )\). Let’s try a squared error cost on the example from an earlier lab, now including the sigmoid.

Here is our training data:

[ ]:

x_train = np.array([0., 1, 2, 3, 4, 5],dtype=np.longdouble)

y_train = np.array([0, 0, 0, 1, 1, 1],dtype=np.longdouble)

plt_simple_example(x_train, y_train)

Now, let’s get a surface plot of the cost using a squared error cost:

where

Plot logistic squared error

[ ]:

import numpy as np

import matplotlib.pyplot as plt

from matplotlib import cm

x_train = np.array([0., 1, 2, 3, 4, 5],dtype=np.longdouble)

y_train = np.array([0, 0, 0, 1, 1, 1],dtype=np.longdouble)

wx,by=np.meshgrid(np.linspace(-6,12,100),np.linspace(10,-20,100))

def logistic_model(x,w,b):

return 1/(1+np.exp(-(w*x+b)))

def cost_fn_logistic(x,w,b,y):

return np.sum((logistic_model(x,w,b)-y)**2)/2/len(x)

cost_f=np.zeros(wx.shape)

for wi in range(wx.shape[0]):

for wj in range(wx.shape[1]):

w,b=wx[wi,wj],by[wi,wj]

#print(cost_fn_logistic(x_train,w,b,y_train))

cost_f[wi,wj]=cost_fn_logistic(x_train,w,b,y_train)

fig = plt.figure()

fig.canvas.toolbar_visible = False

fig.canvas.header_visible = False

fig.canvas.footer_visible = False

ax = fig.add_subplot(1, 1, 1, projection='3d')

ax.plot_surface(wx, by, cost_f, alpha=0.6,cmap=cm.coolwarm)

[ ]:

plt.close('all')

plt_logistic_squared_error(x_train,y_train)

plt.show()

While this produces a pretty interesting plot, the surface above not nearly as smooth as the ‘soup bowl’ from linear regression!

Logistic regression requires a cost function more suitable to its non-linear nature. This starts with a Loss function. This is described below.

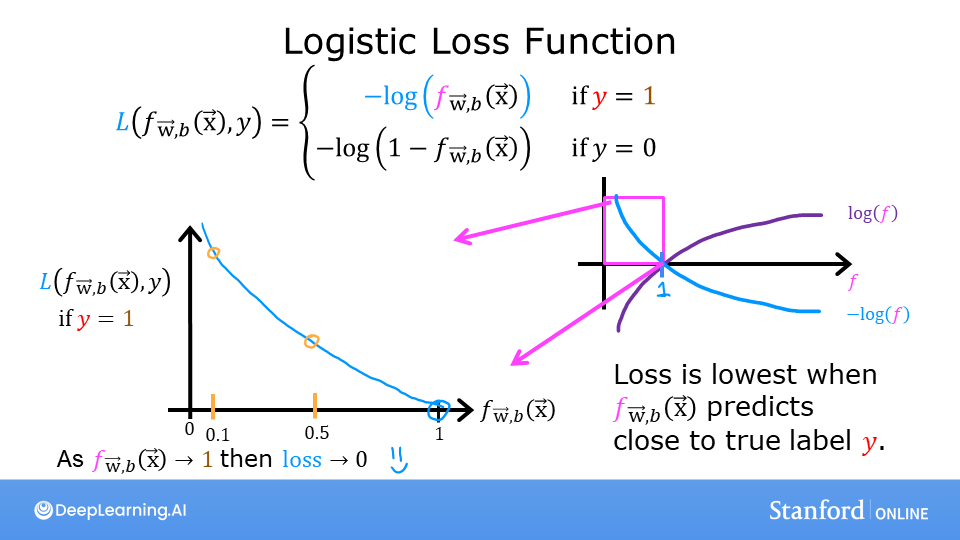

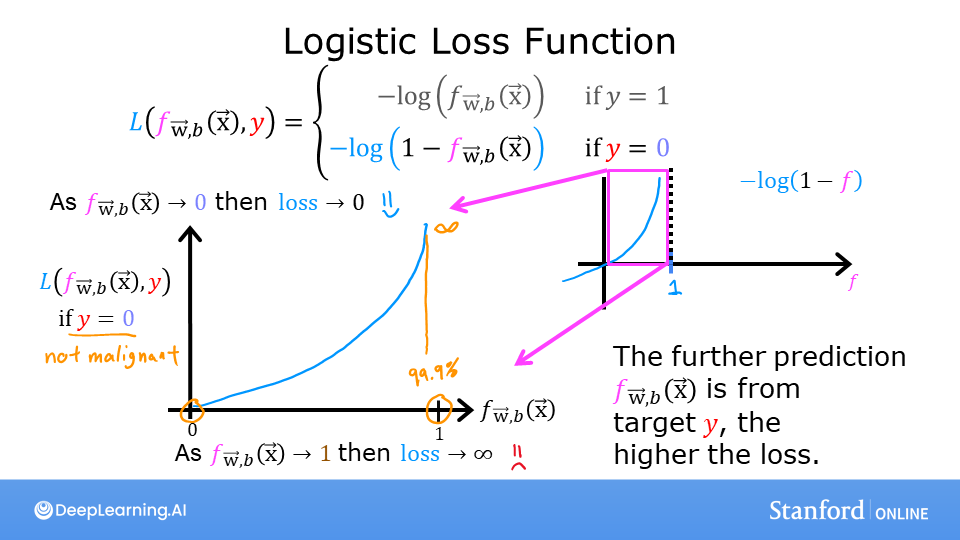

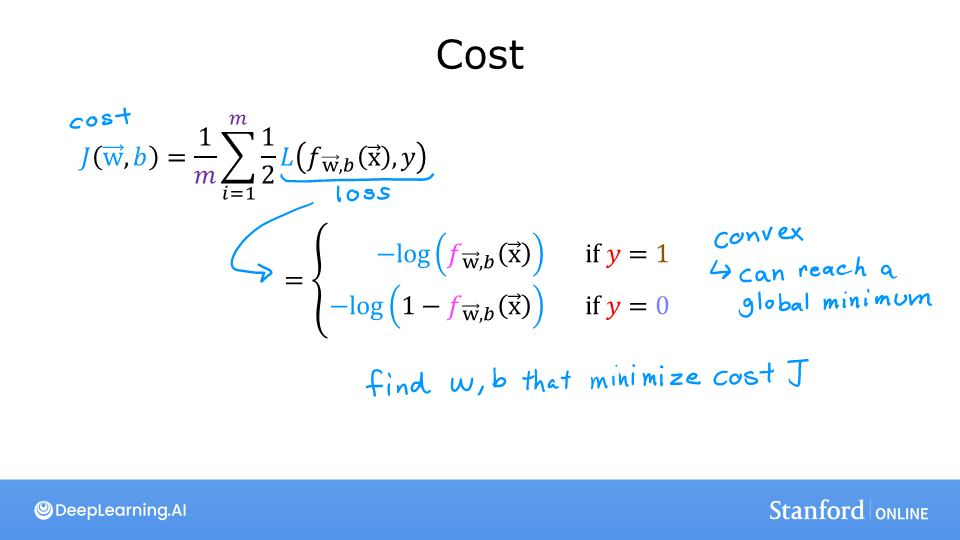

Logistic Loss Function

Logistic Regression uses a loss function more suited to the task of categorization where the target is 0 or 1 rather than any number.

Definition Note: In this course, these definitions are used:Loss is a measure of the difference of a single example to its target value while theCost is a measure of the losses over the training set

This is defined: * \(loss(f_{\mathbf{w},b}(\mathbf{x}^{(i)}), y^{(i)})\) is the cost for a single data point, which is:

- :nbsphinx-math:`begin{equation}

- loss(f_{mathbf{w},b}(mathbf{x}^{(i)}), y^{(i)}) = begin{cases}

logleft(f_{mathbf{w},b}left( mathbf{x}^{(i)} right) right) & text{if $y^{(i)}=1$}\

log left( 1 - f_{mathbf{w},b}left( mathbf{x}^{(i)} right) right) & text{if $y^{(i)}=0$}

end{cases}

end{equation}`

\(f_{\mathbf{w},b}(\mathbf{x}^{(i)})\) is the model’s prediction, while \(y^{(i)}\) is the target value.

\(f_{\mathbf{w},b}(\mathbf{x}^{(i)}) = g(\mathbf{w} \cdot\mathbf{x}^{(i)}+b)\) where function \(g\) is the sigmoid function.

The defining feature of this loss function is the fact that it uses two separate curves. One for the case when the target is zero or (\(y=0\)) and another for when the target is one (\(y=1\)). Combined, these curves provide the behavior useful for a loss function, namely, being zero when the prediction matches the target and rapidly increasing in value as the prediction differs from the target. Consider the curves below:

[ ]:

plt_two_logistic_loss_curves()

Combined, the curves are similar to the quadratic curve of the squared error loss. Note, the x-axis is \(f_{\mathbf{w},b}\) which is the output of a sigmoid. The sigmoid output is strictly between 0 and 1.

The loss function above can be rewritten to be easier to implement.

\[\begin{split}\begin{align} loss(f_{\mathbf{w},b}(\mathbf{x}^{(i)}), 0) &= (-(0) \log\left(f_{\mathbf{w},b}\left( \mathbf{x}^{(i)} \right) \right) - \left( 1 - 0\right) \log \left( 1 - f_{\mathbf{w},b}\left( \mathbf{x}^{(i)} \right) \right) \\ &= -\log \left( 1 - f_{\mathbf{w},b}\left( \mathbf{x}^{(i)} \right) \right) \end{align}\end{split}\]and when $ y^{(i)} = 1$, the right-hand term is eliminated:

\[\begin{split}\begin{align} loss(f_{\mathbf{w},b}(\mathbf{x}^{(i)}), 1) &= (-(1) \log\left(f_{\mathbf{w},b}\left( \mathbf{x}^{(i)} \right) \right) - \left( 1 - 1\right) \log \left( 1 - f_{\mathbf{w},b}\left( \mathbf{x}^{(i)} \right) \right)\\ &= -\log\left(f_{\mathbf{w},b}\left( \mathbf{x}^{(i)} \right) \right) \end{align}\end{split}\]

OK, with this new logistic loss function, a cost function can be produced that incorporates the loss from all the examples. This will be the topic of the next lab. For now, let’s take a look at the cost vs parameters curve for the simple example we considered above:

[ ]:

plt.close('all')

cst = plt_logistic_cost(x_train,y_train)

This curve is well suited to gradient descent! It does not have plateaus, local minima, or discontinuities. Note, it is not a bowl as in the case of squared error. Both the cost and the log of the cost are plotted to illuminate the fact that the curve, when the cost is small, has a slope and continues to decline. Reminder: you can rotate the above plots using your mouse.

You have: - determined a squared error loss function is not suitable for classification tasks - developed and examined the logistic loss function which is suitable for classification tasks.

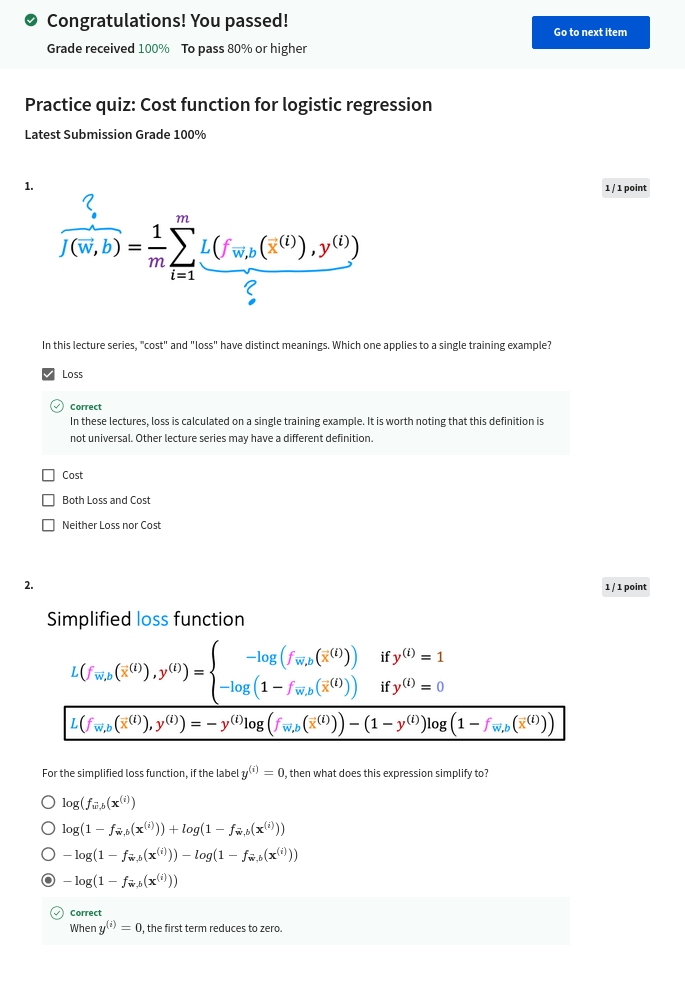

Optional Lab - 3.5: Cost Function for Logistic Regression

Goals

In this lab, you will: - examine the implementation and utilize the cost function for logistic regression.

[ ]:

import numpy as np

%matplotlib widget

import matplotlib.pyplot as plt

from lab_utils_common import plot_data, sigmoid, dlc

plt.style.use('week3/OptionalLabs/deeplearning.mplstyle')

Dataset

Let’s start with the same dataset as was used in the decision boundary lab.

[ ]:

X_train = np.array([[0.5, 1.5], [1,1], [1.5, 0.5], [3, 0.5], [2, 2], [1, 2.5]]) #(m,n)

y_train = np.array([0, 0, 0, 1, 1, 1]) #(m,)

We will use a helper function to plot this data. The data points with label \(y=1\) are shown as red crosses, while the data points with label \(y=0\) are shown as blue circles.

[ ]:

fig,ax = plt.subplots(1,1,figsize=(4,4))

plot_data(X_train, y_train, ax)

# Set both axes to be from 0-4

ax.axis([0, 4, 0, 3.5])

ax.set_ylabel('$x_1$', fontsize=12)

ax.set_xlabel('$x_0$', fontsize=12)

plt.show()

Cost function

In a previous lab, you developed the logistic loss function. Recall, loss is defined to apply to one example. Here you combine the losses to form the cost, which includes all the examples.

Recall that for logistic regression, the cost function is of the form

where * \(loss(f_{\mathbf{w},b}(\mathbf{x}^{(i)}), y^{(i)})\) is the cost for a single data point, which is:

$$loss(f_{\mathbf{w},b}(\mathbf{x}^{(i)}), y^{(i)}) = -y^{(i)} \log\left(f_{\mathbf{w},b}\left( \mathbf{x}^{(i)} \right) \right) - \left( 1 - y^{(i)}\right) \log \left( 1 - f_{\mathbf{w},b}\left( \mathbf{x}^{(i)} \right) \right) \tag{2}$$

where m is the number of training examples in the data set and:

\[\begin{split}\begin{align} f_{\mathbf{w},b}(\mathbf{x^{(i)}}) &= g(z^{(i)})\tag{3} \\ z^{(i)} &= \mathbf{w} \cdot \mathbf{x}^{(i)}+ b\tag{4} \\ g(z^{(i)}) &= \frac{1}{1+e^{-z^{(i)}}}\tag{5} \end{align}\end{split}\]

Code Description

The algorithm for compute_cost_logistic loops over all the examples calculating the loss for each example and accumulating the total.

Note that the variables X and y are not scalar values but matrices of shape (\(m, n\)) and (\(𝑚\),) respectively, where \(𝑛\) is the number of features and \(𝑚\) is the number of training examples.

[ ]:

def compute_cost_logistic(X, y, w, b):

"""

Computes cost

Args:

X (ndarray (m,n)): Data, m examples with n features

y (ndarray (m,)) : target values

w (ndarray (n,)) : model parameters

b (scalar) : model parameter

Returns:

cost (scalar): cost

"""

m = X.shape[0]

cost = 0.0

for i in range(m):

z_i = np.dot(X[i],w) + b

f_wb_i = sigmoid(z_i)

cost += -y[i]*np.log(f_wb_i) - (1-y[i])*np.log(1-f_wb_i)

cost = cost / m

return cost

Check the implementation of the cost function using the cell below.

[ ]:

w_tmp = np.array([1,1])

b_tmp = -3

print(compute_cost_logistic(X_train, y_train, w_tmp, b_tmp))

Expected output: 0.3668667864055175

[ ]:

import numpy as np

import matplotlib.pyplot as plt

from matplotlib import cm

wx,by=np.meshgrid(np.linspace(-6,12,100),np.linspace(10,-20,100))

def logistic_model(x,w,b):

return np.array([ (1/(1+np.exp(-(np.dot(w,i)+b)))) for i in x])

def cost_fn_logistic(x,w,b,y):

return np.sum(-y*np.log(logistic_model(x,w,b))-(1-y)*np.log(1-logistic_model(x,w,b)))/len(x)

cost_fn_logistic(X_train,np.array([1,1]),-4,y_train)

Example

Now, let’s see what the cost function output is for a different value of \(w\).

In a previous lab, you plotted the decision boundary for \(b = -3, w_0 = 1, w_1 = 1\). That is, you had

b = -3, w = np.array([1,1]).Let’s say you want to see if \(b = -4, w_0 = 1, w_1 = 1\), or

b = -4, w = np.array([1,1])provides a better model.

Let’s first plot the decision boundary for these two different \(b\) values to see which one fits the data better.

For \(b = -3, w_0 = 1, w_1 = 1\), we’ll plot \(-3 + x_0+x_1 = 0\) (shown in blue)

For \(b = -4, w_0 = 1, w_1 = 1\), we’ll plot \(-4 + x_0+x_1 = 0\) (shown in magenta)

[ ]:

import matplotlib.pyplot as plt

# Choose values between 0 and 6

x0 = np.arange(0,6)

# Plot the two decision boundaries

x1 = 3 - x0

x1_other = 4 - x0

fig,ax = plt.subplots(1, 1, figsize=(4,4))

# Plot the decision boundary

ax.plot(x0,x1, c=dlc["dlblue"], label="$b$=-3")

ax.plot(x0,x1_other, c=dlc["dlmagenta"], label="$b$=-4")

ax.axis([0, 4, 0, 4])

# Plot the original data

plot_data(X_train,y_train,ax)

ax.axis([0, 4, 0, 4])

ax.set_ylabel('$x_1$', fontsize=12)

ax.set_xlabel('$x_0$', fontsize=12)

plt.legend(loc="upper right")

plt.title("Decision Boundary")

plt.show()

You can see from this plot that b = -4, w = np.array([1,1]) is a worse model for the training data. Let’s see if the cost function implementation reflects this.

[ ]:

w_array1 = np.array([1,1])

b_1 = -3

w_array2 = np.array([1,1])

b_2 = -4

print("Cost for b = -3 : ", compute_cost_logistic(X_train, y_train, w_array1, b_1))

print("Cost for b = -4 : ", compute_cost_logistic(X_train, y_train, w_array2, b_2))

Expected output

Cost for b = -3 : 0.3668667864055175

Cost for b = -4 : 0.5036808636748461

You can see the cost function behaves as expected and the cost for b = -4, w = np.array([1,1]) is indeed higher than the cost for b = -3, w = np.array([1,1])

In this lab you examined and utilized the cost function for logistic regression.

Optional Lab - 3.6: Gradient Descent for Logistic Regression

Goals

In this lab, you will: - update gradient descent for logistic regression. - explore gradient descent on a familiar data set

[ ]:

import copy, math

import numpy as np

%matplotlib widget

import matplotlib.pyplot as plt

from lab_utils_common import dlc, plot_data, plt_tumor_data, sigmoid, compute_cost_logistic

from plt_quad_logistic import plt_quad_logistic, plt_prob

plt.style.use('week3/OptionalLabs/deeplearning.mplstyle')

Data set

Let’s start with the same two feature data set used in the decision boundary lab.

[ ]:

X_train = np.array([[0.5, 1.5], [1,1], [1.5, 0.5], [3, 0.5], [2, 2], [1, 2.5]])

y_train = np.array([0, 0, 0, 1, 1, 1])

As before, we’ll use a helper function to plot this data. The data points with label \(y=1\) are shown as red crosses, while the data points with label \(y=0\) are shown as blue circles.

[ ]:

fig,ax = plt.subplots(1,1,figsize=(4,4))

plot_data(X_train, y_train, ax)

ax.axis([0, 4, 0, 3.5])

ax.set_ylabel('$x_1$', fontsize=12)

ax.set_xlabel('$x_0$', fontsize=12)

plt.show()

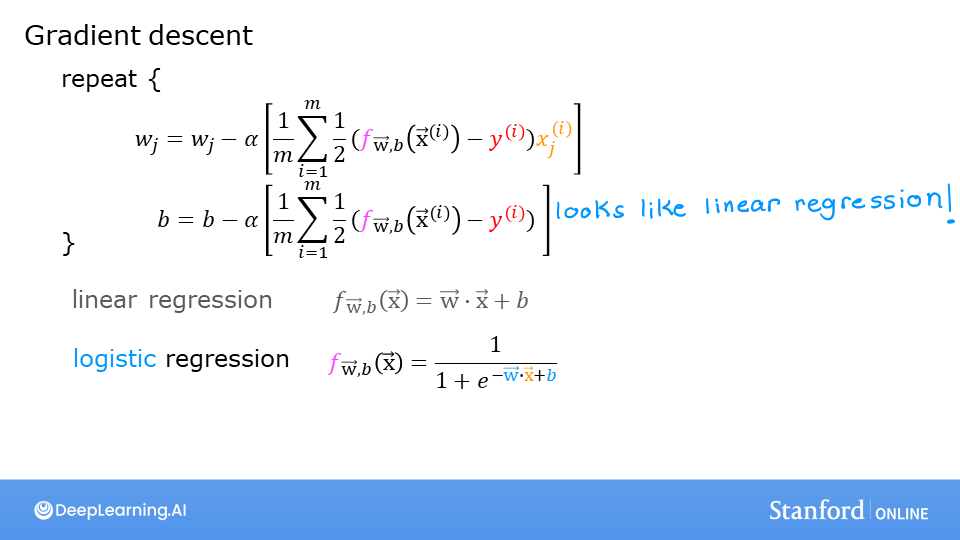

Logistic Gradient Descent

Recall the gradient descent algorithm utilizes the gradient calculation:

Where each iteration performs simultaneous updates on \(w_j\) for all \(j\), where

m is the number of training examples in the data set

\(f_{\mathbf{w},b}(x^{(i)})\) is the model’s prediction, while \(y^{(i)}\) is the target

For a logistic regression model \(z = \mathbf{w} \cdot \mathbf{x} + b\) \(f_{\mathbf{w},b}(x) = g(z)\) where \(g(z)\) is the sigmoid function: \(g(z) = \frac{1}{1+e^{-z}}\)

Gradient Descent Implementation

The gradient descent algorithm implementation has two components: - The loop implementing equation (1) above. This is gradient_descent below and is generally provided to you in optional and practice labs. - The calculation of the current gradient, equations (2,3) above. This is compute_gradient_logistic below. You will be asked to implement this week’s practice lab.

Calculating the Gradient, Code Description

dj_dw and dj_db - for each example - calculate the error for that example \(g(\mathbf{w} \cdot \mathbf{x}^{(i)} + b) - \mathbf{y}^{(i)}\) - for each input value \(x_{j}^{(i)}\) in this example,dj_dw. (equation 2 above) - add the error to dj_db (equation 3 above)divide

dj_dbanddj_dwby total number of examples (m)note that \(\mathbf{x}^{(i)}\) in numpy

X[i,:]orX[i]and \(x_{j}^{(i)}\) isX[i,j]

[ ]:

My solution

Logistic regression(1 variable)

import numpy as np

import matplotlib.pyplot as plt

from matplotlib import cm

x_train = np.array([0., 1, 2, 3, 4, 5],dtype=np.longdouble)

y_train = np.array([0, 0, 0, 1, 1, 1],dtype=np.longdouble)

#wx,by=np.meshgrid(np.linspace(-6,12,100),np.linspace(10,-20,100))

def model(x,theta):

w,b=theta

sigmoid=np.zeros(len(x))

for i in range(len(x)):

if np.isscalar(w):

w=np.array(w)

if w.shape!=x[i].shape:

print("Shape of W and X dosn't match")

sys.exit()

sigmoid[i]=1/(1+np.exp(-(np.dot(w,x[i])+b)))

return sigmoid

def dmodel_w(x,theta):

w,b=theta

return x

def dmodel_b(x,theta):

w,b=theta

return 1.

def cost(x,theta,y):

w,b=theta

cf= -y*np.log(model(x,theta))-(1-y)*np.log(1-model(x,theta))

return np.sum(cf)/np.shape(x)[0]

def dcost_w(x,theta,y):

return np.sum((model(x,theta)-y)*dmodel_w(x,theta))/len(x)

def dcost_b(x,theta,y):

return np.sum((model(x,theta)-y)*dmodel_b(x,theta))/len(x)

def compute_gradient(x,theta,y):

return dcost_w(x,theta,y),dcost_b(x,theta,y)

np.set_printoptions(precision=2)

def gradient_decent(x,y,theta,alpha,niter):

w,b=theta

if theta[1]>0: #constraining parameters

b=-theta[1]

cost_i=np.zeros(niter)

for i in np.arange(niter):

if i>1:

if np.abs((cost_i[i]-cost_i[i-1])/cost_i[i])<0.05:

alpha/=2

dcw,dcb= compute_gradient(x,theta,y)

w = w-alpha*dcw

b = b-alpha*dcb

theta=w,b

cost_i[i]=cost(x,theta,y)

if i>1:

if cost_i[i]>cost_i[i-1]:

alpha/=2

#print(cost_i[i],alpha)

#print(theta)

return cost_i,theta

niter=1000

Win=20

Bin=5

alpha=0.5

theta_in=Win,Bin

grad_dec_result,theta_f=gradient_decent(x_train,y_train,theta_in,alpha,niter)

wf,bf=theta_f

print(wf,bf,grad_dec_result[-1])

plt.figure(figsize=(8,4))

ax=plt.subplot(121)

plt.plot(np.arange(niter),grad_dec_result,".")

plt.yscale("log")

plt.xlabel("No of steps")

plt.ylabel("Cost function")

plt.ylim(bottom=0.01)

ax=plt.subplot(1,2,2)

plt.plot(x_train, model(x_train,theta_f), c = "g",label="Predcited model")

plt.scatter(x_train, y_train, marker='x', c='r')

# Set the title

plt.title("Model fit")

# Set the y-axis label

plt.ylabel('training data')

# Set the x-axis label

plt.xlabel('training input')

plt.legend()

plt.tight_layout()

Logistic regression(2 variables)

import numpy as np,sys

import matplotlib.pyplot as plt

from matplotlib import cm

x_train = np.array([[0.5, 1.5], [1,1], [1.5, 0.5], [3, 0.5], [2, 2],[0.5,0.5],[2.7,1.5], [1, 2.5]])

y_train = np.array([1, 1, 1, 1, 1,0,1, 1],dtype=np.longdouble)

#wx,by=np.meshgrid(np.linspace(-6,12,100),np.linspace(10,-20,100))

def model(x,theta):

w,b=theta

if np.isscalar(x):

x=np.array(x)

if np.isscalar(w):

w=np.array([w])

elif isinstance(w,tuple):

w=np.array(w)

sigmoid=np.zeros(len(x))

for i in range(len(x)):

if w.shape!=x[i].shape:

print("Shape of W and X dosn't match", w.shape,x[i].shape)

sys.exit()

sigmoid[i]=1/(1+np.exp(-(np.dot(w,x[i])+b)))

return sigmoid

def dmodel_w(x,theta):

w,b=theta

return x

def dmodel_b(x,theta):

w,b=theta

return 1.

def cost(x,theta,y):

w,b=theta

cf= -y*np.log(model(x,theta))-(1-y)*np.log(1-model(x,theta))

return np.sum(cf)/np.shape(x)[0]

def dcost_w(x,theta,y):

w,b=theta

if np.isscalar(w):

w=np.array([w])

elif isinstance(w,tuple):

w=np.array(w)

dcost_w_result=np.zeros(w.shape)

for wi in range(len(w)):

dcost_w_result[wi]=np.sum((model(x,theta)-y)*dmodel_w(x,theta)[:,wi])/len(x)

return dcost_w_result

def dcost_b(x,theta,y):

return np.sum((model(x,theta)-y)*dmodel_b(x,theta))/len(x)

def compute_gradient(x,theta,y):

return dcost_w(x,theta,y),dcost_b(x,theta,y)

np.set_printoptions(precision=2)

def gradient_decent(x,y,theta,alpha,niter):

w,b=theta

if np.isscalar(w):

w=np.array(w)

elif isinstance(w, tuple):

w=np.array(w)

if theta[1]>0: #constraining parameters

b=-theta[1]

cost_i=np.zeros(niter)

for i in np.arange(niter):

if i>1:

if np.abs((cost_i[i]-cost_i[i-1])/cost_i[i])<0.05:

alpha/=2

dcw,dcb= compute_gradient(x,theta,y)

w = w-alpha*dcw

b = b-alpha*dcb

theta=w,b

cost_i[i]=cost(x,theta,y)

if i>1:

if cost_i[i]>cost_i[i-1]:

alpha/=2

#print(cost_i[i],alpha)

#print(theta)

return cost_i,theta

niter=10000

Win=np.array([2.,3.])

Bin=1.

alpha=0.5

theta_in=Win,Bin

grad_dec_result,theta_f=gradient_decent(x_train,y_train,theta_in,alpha,niter)

wf,bf=theta_f

print(wf,bf,grad_dec_result[-1])

plt.figure(figsize=(8,4))

ax=plt.subplot(121)

plt.plot(np.arange(niter),grad_dec_result,".")

plt.yscale("log")

plt.xlabel("No of steps")

plt.ylabel("Cost function")

plt.ylim(bottom=0.01)

ax=plt.subplot(1,2,2)

#plt.plot(x_train, model(x_train,theta_f), c = "g",label="Predcited model")

ax.plot((-bf/wf[0],0),(0,-bf/wf[1]),label="Predicted model")

pos=y_train>0.5

neg=y_train<0.5